Authors: Jichen Feng, Yifan Zhang, Chenggong Zhang, Yifu Lu, Shilong Liu, Mengdi Wang

Venue: arXiv preprint arXiv:2512.23676 (2025)

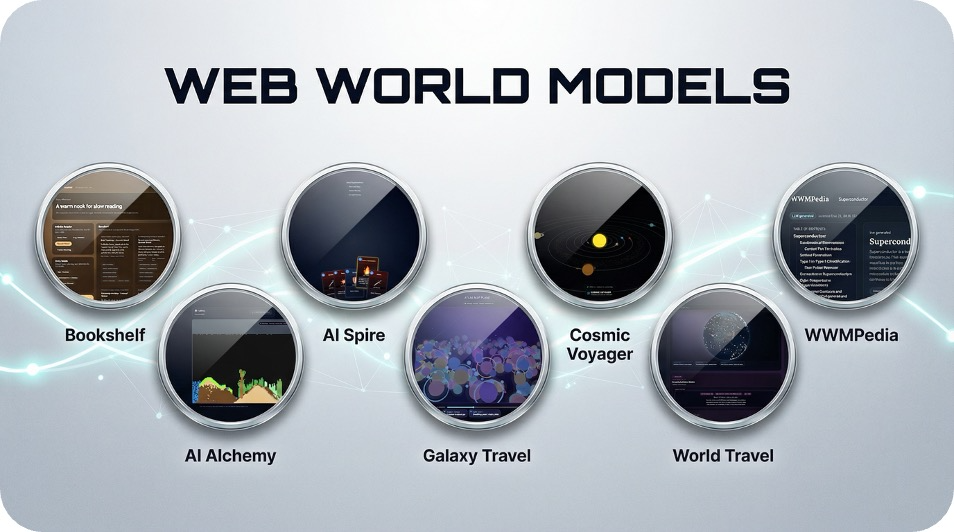

We propose Web World Models (WWMs): persistent, open-ended environments where the world state and rules (“physics”) live in ordinary web code, while large language models generate narrative context and high-level actions. We implement several WWMs on a realistic web stack (e.g., an infinite travel atlas grounded in real geography, procedural galaxy explorers, encyclopedic/narrative worlds, and game-like simulations) and distill practical design principles for making worlds both controllable and scalable.
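The split described above puts state and rules in code and limits the LLM to proposing and narrating. It can be sketched as follows. This is a minimal illustration under assumptions, not the authors' implementation: `WorldState`, `ROUTES`, `apply_action`, and `step` are hypothetical names, the travel graph is made up, and the two LLM roles are passed in as plain callables so that stubs can stand in for real model calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class WorldState:
    """Authoritative world state, owned by ordinary code (hypothetical fields)."""
    location: str
    fuel: int
    visited: list[str] = field(default_factory=list)


# Hypothetical travel graph for illustration: (origin, destination) -> fuel cost.
ROUTES: dict[tuple[str, str], int] = {
    ("Paris", "Lyon"): 3,
    ("Lyon", "Marseille"): 2,
    ("Paris", "Brussels"): 2,
}


def apply_action(state: WorldState, destination: str) -> bool:
    """The 'physics' layer: accept a move only if a route exists and fuel suffices."""
    cost = ROUTES.get((state.location, destination))
    if cost is None or cost > state.fuel:
        return False  # rejected proposals leave the state untouched
    state.visited.append(state.location)
    state.location = destination
    state.fuel -= cost
    return True


def step(
    state: WorldState,
    propose: Callable[[WorldState], str],  # stands in for an LLM choosing a high-level action
    narrate: Callable[[WorldState], str],  # stands in for an LLM writing narrative text
) -> str:
    """One turn: the model proposes, the code decides, the model narrates."""
    destination = propose(state)
    if not apply_action(state, destination):
        return f"The way to {destination} is closed."
    return narrate(state)


# Usage with stub "models" in place of real LLM calls.
state = WorldState(location="Paris", fuel=4)
print(step(state, lambda s: "Lyon", lambda s: f"You arrive in {s.location}."))
# → You arrive in Lyon.
print(step(state, lambda s: "Marseille", lambda s: "unused"))
# → The way to Marseille is closed.
```

`apply_action` is ordinary code, so a proposal that the rules reject never reaches the state, whatever the model outputs. The model only chooses among moves and describes the result.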

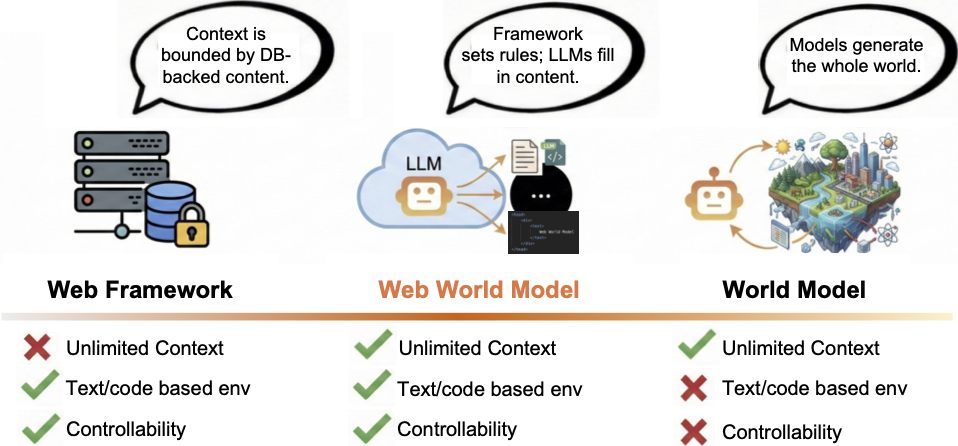

Fig. 1: Left: Traditional web frameworks fix context in databases, which limits scalability. Center: The Web World Model (ours) decouples logic from content and generates unlimited context with LLMs on top of a code-based physics layer, without heavy data storage. Right: Fully generative world models can produce unlimited context and rich video/3D content. However, when the world is constructed primarily through generation, a fixed, deterministic global framework is harder to maintain, which reduces controllability.
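One way to read "unlimited context without heavy data storage" is that structural facts are recomputed from a seed and coordinates instead of being stored, and the LLM only writes prose around those facts. The sketch below assumes a grid-shaped galaxy. `cell_rng`, `system_at`, `prompt_for`, and the star-class list are illustrative and are not taken from the paper.

```python
import hashlib
import random

# Illustrative catalogue; not taken from the paper.
STAR_CLASSES = ["O", "B", "A", "F", "G", "K", "M"]


def cell_rng(world_seed: str, x: int, y: int) -> random.Random:
    """Derive a reproducible RNG for one grid cell from the world seed and coordinates."""
    digest = hashlib.sha256(f"{world_seed}:{x}:{y}".encode()).digest()
    return random.Random(int.from_bytes(digest[:8], "big"))


def system_at(world_seed: str, x: int, y: int) -> dict:
    """Recompute a star system's structural facts on demand; nothing is stored."""
    rng = cell_rng(world_seed, x, y)
    return {
        "id": f"{x},{y}",
        "star_class": rng.choice(STAR_CLASSES),
        "planets": rng.randint(0, 8),
    }


def prompt_for(system: dict) -> str:
    """Hypothetical prompt: code-generated facts constrain what the LLM may write."""
    return (
        f"Describe star system {system['id']}: a class {system['star_class']} star "
        f"with {system['planets']} planets. Do not contradict these facts."
    )


# Revisiting the same coordinates regenerates identical facts.
assert system_at("galaxy-1", 10, -3) == system_at("galaxy-1", 10, -3)
print(prompt_for(system_at("galaxy-1", 10, -3)))
```

The sketch uses `hashlib` instead of Python's built-in `hash()` because string hashing is randomized per process, and that randomization would make the same coordinates produce different systems across sessions.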

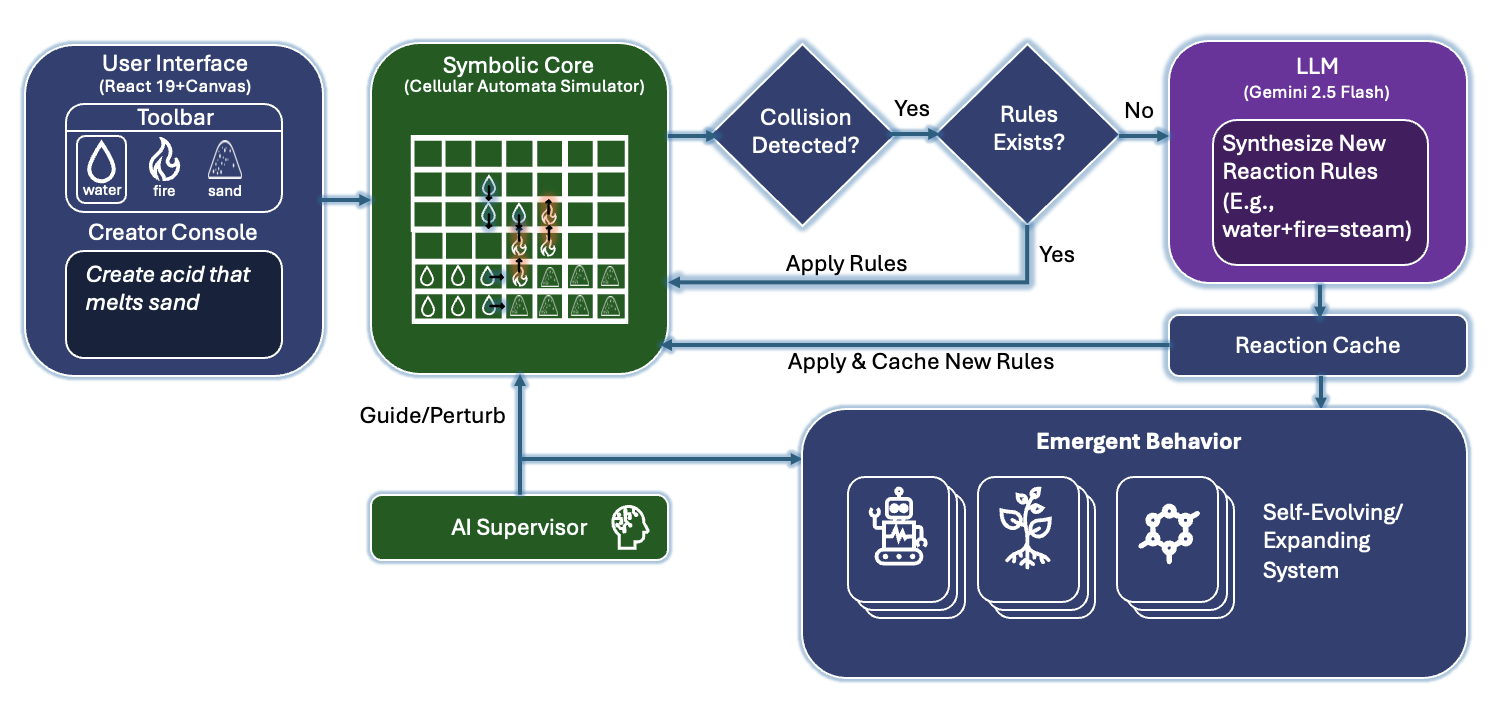

Fig. 2: Neural-symbolic architecture of AI Alchemy. A React+Canvas user interface (a toolbar and a natural-language Creator Console) injects user-defined materials into a symbolic cellular-automaton “falling-sand” simulator. When particles collide, the engine applies an existing reaction rule if one is available; otherwise, it queries an LLM (Gemini Flash) to synthesize a schema-constrained reaction outcome, which is cached and immediately integrated into the update loop. An optional AI Supervisor monitors the canvas and guides or perturbs the system, enabling controlled emergent behavior in a self-expanding sandbox.
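The collision path in the caption (look up a rule, otherwise query the model, validate, cache, continue) can be sketched as below. The schema, the material set, and the function names are assumptions for illustration. The real system calls Gemini Flash, which is replaced here by an injected `ask_llm` callable.

```python
from typing import Callable

# Hypothetical material set; in the described system users can add materials at runtime.
MATERIALS = {"water", "fire", "steam", "sand", "glass"}


def validate(outcome: dict) -> dict:
    """Hypothetical schema: exactly 'product' (a known material) and 'consumed' (a bool)."""
    if set(outcome) != {"product", "consumed"}:
        raise ValueError(f"unexpected keys: {sorted(outcome)}")
    if outcome["product"] not in MATERIALS or not isinstance(outcome["consumed"], bool):
        raise ValueError(f"invalid outcome: {outcome}")
    return outcome


def react(
    a: str,
    b: str,
    rules: dict[frozenset, dict],
    ask_llm: Callable[[str, str], dict],  # stands in for the Gemini Flash call
) -> dict:
    """Resolve a collision: use a cached rule if one exists, else ask the model once."""
    key = frozenset((a, b))  # unordered, so (a, b) and (b, a) share one rule
    if key in rules:
        return rules[key]  # existing rule: no model call
    outcome = validate(ask_llm(a, b))  # novel pair: query, then enforce the schema
    rules[key] = outcome  # cache so this pair never triggers another query
    return outcome


# Usage: a pre-seeded rule is answered from the table without calling the stub model.
rules = {frozenset(("sand", "fire")): {"product": "glass", "consumed": True}}
print(react("fire", "sand", rules, lambda a, b: {"product": "steam", "consumed": True}))
# → {'product': 'glass', 'consumed': True}
```

Keying on a `frozenset` makes `react("fire", "water", ...)` and `react("water", "fire", ...)` share one cache entry. An outcome that fails validation is never cached, so a malformed model response cannot become a permanent rule.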

@misc{feng2025webworldmodels,
  title         = {Web World Models},
  author        = {Feng, Jichen and Zhang, Yifan and Zhang, Chenggong and Lu, Yifu and Liu, Shilong and Wang, Mengdi},
  year          = {2025},
  eprint        = {2512.23676},
  archivePrefix = {arXiv},
  primaryClass  = {cs.AI},
  url           = {https://arxiv.org/abs/2512.23676}
}